Ultimate Image Compression Guide: Reduce File Size Without Quality Loss 2025

In today's digital landscape, where web performance directly impacts user experience, search rankings, and conversion rates, image compression has evolved from a technical nice-to-have into an essential optimization strategy. With images accounting for over 60% of average webpage weight, mastering compression techniques can dramatically improve your site's loading speed and user engagement.

This comprehensive guide covers the professional strategies, advanced techniques, and tools that let you achieve large file size reductions, often 70-80%, while maintaining the visual quality your audience expects. Whether you're optimizing product photos for e-commerce, preparing blog images for faster loading, or processing thousands of images for a digital campaign, these methods can streamline your workflow.

Understanding Image Compression Fundamentals

The Science Behind Compression

How Compression Works: Image compression exploits redundancy and human visual perception limitations to reduce file sizes. Every digital image contains:

- Spatial redundancy: Adjacent pixels often have similar values

- Spectral redundancy: Color channels frequently correlate

- Temporal redundancy: In image sequences (animations, video frames), consecutive frames share common elements; this does not apply to a single still image

- Psychovisual redundancy: Details the human eye cannot perceive
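To make the idea of spatial redundancy concrete, here is a minimal sketch using only Python's standard `zlib` module. The two byte strings are synthetic stand-ins for pixel data (an assumption for illustration, not a real image format): one has long runs of identical values, the other is random noise.

```python
import os
import zlib

# 64 KiB of synthetic "pixels" (hypothetical data, not a real image file)
smooth = bytes((i // 256) % 256 for i in range(65536))  # long runs of equal values
noisy = os.urandom(65536)                                # no exploitable redundancy

smooth_size = len(zlib.compress(smooth, 9))
noisy_size = len(zlib.compress(noisy, 9))

print(f"smooth data: {len(smooth)} -> {smooth_size} bytes")
print(f"noisy data:  {len(noisy)} -> {noisy_size} bytes")

# Lossless compression is fully reversible
roundtrip_ok = zlib.decompress(zlib.compress(smooth, 9)) == smooth
print("round trip identical:", roundtrip_ok)
```

The redundant data shrinks to a small fraction of its size, while the random data barely changes, which is why smooth graphics compress far better than noisy photographs under lossless schemes.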

Compression Efficiency Formula:

Compression Ratio = Original File Size ÷ Compressed File Size

Space Savings = (1 - Compressed Size ÷ Original Size) × 100%

Example:

Original: 2.5 MB → Compressed: 0.5 MB

Compression Ratio: 5:1

Space Savings: 80%
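The two formulas above can be sketched as a pair of small helpers. The function names are illustrative and not part of any library.

```python
def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """Original size divided by compressed size (5.0 means 5:1)."""
    if original_bytes <= 0 or compressed_bytes <= 0:
        raise ValueError("sizes must be positive")
    return original_bytes / compressed_bytes


def space_savings_percent(original_bytes: int, compressed_bytes: int) -> float:
    """Percentage of the original size removed by compression."""
    if original_bytes <= 0 or compressed_bytes < 0:
        raise ValueError("original must be positive and compressed non-negative")
    return (1 - compressed_bytes / original_bytes) * 100


# The worked example: 2.5 MB original, 0.5 MB compressed
original = 2_500_000
compressed = 500_000
print(f"{compression_ratio(original, compressed):.0f}:1")     # 5:1
print(f"{space_savings_percent(original, compressed):.0f}%")  # 80%
```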

Lossy vs. Lossless Compression Deep Dive

Lossless Compression:

Advantages:

✓ Perfect quality preservation

✓ Reversible process

✓ Ideal for text, line art, logos

✓ Professional archival standard

Disadvantages:

✗ Limited compression ratios (typically 2:1 to 4:1)

✗ Larger file sizes than lossy

✗ Not optimal for photographs

✗ Higher bandwidth requirements

Best Use Cases:

- Logo files and graphics with text

- Medical and scientific imagery

- Screenshots and interface elements

- Images requiring pixel-perfect accuracy

Lossy Compression:

Advantages:

✓ Dramatic size reductions (10:1 to 100:1 ratios)

✓ Excellent for photographs

✓ Faster loading times

✓ Bandwidth-efficient

Disadvantages:

✗ Irreversible quality loss

✗ Potential visible artifacts

✗ Generational degradation

✗ Requires quality balancing

Best Use Cases:

- Photographs and natural images

- Web graphics and thumbnails

- Social media content

- Large image collections
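The difference between the two families can be shown with a toy model. This sketch assumes a 256-value grayscale ramp as the "image" and uses simple quantization as a stand-in for lossy coding; real codecs such as JPEG are far more sophisticated, but the irreversibility works the same way.

```python
import zlib

pixels = bytes(range(256))  # hypothetical 8-bit grayscale ramp

# Lossless: decompression restores every byte exactly
restored = zlib.decompress(zlib.compress(pixels))
print(restored == pixels)  # True


def quantize(data: bytes, step: int = 16) -> bytes:
    """Toy lossy step: snap each value down to a multiple of `step`."""
    return bytes((value // step) * step for value in data)


# Lossy: the discarded low-order detail cannot be recovered
lossy = quantize(pixels)
max_error = max(abs(a - b) for a, b in zip(pixels, lossy))
print(lossy == pixels)  # False
print(max_error)        # 15
print(len(set(lossy)))  # 16 distinct values remain
```

Fewer distinct values means more redundancy for the entropy coder to exploit, which is where the larger lossy compression ratios come from.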

Real-World Performance Comparison:

Test Image: 4000×3000 digital photograph (Original: 8.2 MB)

Lossless PNG: 6.1 MB (26% reduction)

PNG with optimization: 4.8 MB (41% reduction)

JPEG Quality 95%: 2.1 MB (74% reduction)

JPEG Quality 85%: 1.2 MB (85% reduction)

JPEG Quality 75%: 0.8 MB (90% reduction)

WebP Quality 85%: 0.9 MB (89% reduction)

WebP Quality 75%: 0.6 MB (93% reduction)

Format-Specific Compression Strategies

JPEG Optimization Mastery

Quality Settings Deep Analysis:

Quality 100%: Minimal compression, largest files

- Use case: Never recommended for web

- Artifacts: None visible

- File size: 95-100% of original

Quality 95%: High quality with slight compression

- Use case: Print-ready images, professional portfolios

- Artifacts: Virtually imperceptible

- File size: 60-80% of original

Quality 85%: Optimal balance for most uses

- Use case: Web hero images, product photos

- Artifacts: None to minimal in most images

- File size: 40-60% of original

Quality 75%: Standard web optimization

- Use case: Content images, blog photos

- Artifacts: Slight softening in detailed areas

- File size: 30-45% of original

Quality 65%: Aggressive compression

- Use case: Thumbnails, low-priority images

- Artifacts: Noticeable in detailed areas

- File size: 20-35% of original

Quality 50%: Very aggressive compression

- Use case: Fast-loading previews only

- Artifacts: Visible blocking and softening

- File size: 15-25% of original
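In an automated pipeline, the tiers above can be captured as a simple lookup. This is a sketch only: the use-case keys are hypothetical labels chosen for illustration, and the default of 75 is an assumption you should tune for your own content.

```python
# Quality values taken from the tiers listed above; keys are hypothetical labels
JPEG_QUALITY_BY_USE_CASE = {
    "print": 95,
    "hero": 85,
    "product": 85,
    "content": 75,
    "thumbnail": 65,
    "preview": 50,
}


def jpeg_quality_for(use_case: str, default: int = 75) -> int:
    """Return the JPEG quality setting for a use case, or a default if unknown."""
    return JPEG_QUALITY_BY_USE_CASE.get(use_case.strip().lower(), default)


print(jpeg_quality_for("Hero"))       # 85
print(jpeg_quality_for("thumbnail"))  # 65
print(jpeg_quality_for("unknown"))    # 75
```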

Advanced JPEG Techniques:

```bash
# Progressive JPEG encoding for better perceived performance
magick input.jpg -interlace plane -quality 85 progressive_output.jpg

# Optimized JPEG with custom sampling
magick input.jpg -sampling-factor 4:2:0 -quality 85 optimized_output.jpg

# Advanced optimization with mozjpeg
cjpeg -quality 85 -progressive -optimize input.jpg > optimized_output.jpg
```

Chroma Subsampling Optimization:

4:4:4 (No subsampling):

- Preserves full color information

- Largest file sizes

- Use for images with important color details

4:2:2 (Moderate subsampling):

- Reduces horizontal color resolution by half

- 25-30% size reduction

- Good balance for most photographs

4:2:0 (Standard subsampling):

- Reduces color resolution in both dimensions

- 40-50% size reduction

- Default for web images, excellent results
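The arithmetic behind subsampling can be sketched by counting raw samples. The figures below describe uncompressed sample counts only; savings in the final encoded file will generally differ, because the entropy-coded chroma data does not scale linearly with sample count.

```python
import math

# (horizontal divisor, vertical divisor) applied to each of the two chroma planes
SUBSAMPLING = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:2:0": (2, 2)}


def raw_sample_count(width: int, height: int, scheme: str) -> int:
    """Total luma + chroma samples before any encoding."""
    h_div, v_div = SUBSAMPLING[scheme]
    luma = width * height
    chroma = 2 * math.ceil(width / h_div) * math.ceil(height / v_div)
    return luma + chroma


full = raw_sample_count(4000, 3000, "4:4:4")
for scheme in SUBSAMPLING:
    count = raw_sample_count(4000, 3000, scheme)
    saved = (1 - count / full) * 100
    print(f"{scheme}: {count:,} samples ({saved:.1f}% fewer than 4:4:4)")
```

For a 4000×3000 image this gives 36 million samples at 4:4:4, 24 million at 4:2:2, and 18 million at 4:2:0.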

PNG Compression Excellence

PNG Optimization Techniques:

```bash
# Advanced PNG optimization pipeline
optimize_png() {
    local input="$1"
    local output="$2"

    # Work on a copy so the source file is never overwritten
    local work="${output%.png}.tmp.png"
    cp "$input" "$work"

    # Step 1: Reduce color palette if possible (lossy; rewrites the working copy)
    pngquant --quality=80-95 --ext .png --force "$work"

    # Step 2: Optimize compression
    optipng -o7 "$work"

    # Step 3: Further size reduction
    advpng -z -4 "$work"

    # Step 4: Final optimization
    pngcrush -reduce -brute "$work" "$output"

    rm -f "$work"
}
```

Color Depth Optimization:

PNG-24 (True Color):

- 16.7 million colors

- Largest file sizes

- Use when color accuracy is critical

PNG-8 (Indexed Color):

- Up to 256 colors

- Significantly smaller files

- Excellent for graphics with limited color palettes

PNG-8 with Dithering:

- Simulates additional colors through patterns

- Good compromise between size and quality

- Effective for gradients and photographs
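Whether an image can use PNG-8 without any color loss comes down to counting distinct colors. A minimal sketch, assuming pixels are supplied as an iterable of RGB or RGBA tuples (how you obtain them depends on your imaging library):

```python
from typing import Iterable, Tuple


def fits_indexed_palette(pixels: Iterable[Tuple[int, ...]], max_colors: int = 256) -> bool:
    """Return True if the image uses at most `max_colors` distinct colors."""
    seen = set()
    for color in pixels:
        seen.add(color)
        if len(seen) > max_colors:
            return False  # stop early once the palette limit is exceeded
    return True


# Hypothetical inputs: a two-color graphic and a 300-color gradient
two_tone = [(0, 0, 0), (255, 255, 255)] * 500
gradient = [(i % 256, i // 256, 0) for i in range(300)]

print(fits_indexed_palette(two_tone))  # True
print(fits_indexed_palette(gradient))  # False
```

Images that fail this check can still be converted to PNG-8, but only through lossy palette reduction, optionally with dithering.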

Alpha Channel Considerations:

```javascript
// Analyze PNG alpha channel usage
function analyzePNGAlpha(imageData) {
  // ImageData stores pixels as RGBA bytes, so every 4th value is alpha
  const pixels = imageData.data;

  let hasTransparency = false;
  let hasPartialTransparency = false;

  for (let i = 3; i < pixels.length; i += 4) {
    const alpha = pixels[i];
    if (alpha === 0) hasTransparency = true;
    if (alpha > 0 && alpha < 255) hasPartialTransparency = true;
  }

  return {
    hasTransparency,
    hasPartialTransparency,
    recommendation: getFormatRecommendation(hasTransparency, hasPartialTransparency)
  };
}

function getFormatRecommendation(hasTransparency, hasPartialTransparency) {
  if (!hasTransparency && !hasPartialTransparency) {
    return 'Convert to JPEG for smaller file size';
  } else if (hasTransparency && !hasPartialTransparency) {
    return 'Consider PNG-8 with binary transparency';
  } else {
    return 'PNG-24 required for alpha transparency';
  }
}
```

Next-Generation Formats

WebP Optimization:

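```bash
# WebP compression with optimal settings
# Usage: compress_to_webp input output [quality] [photo|graphic|lossless]
compress_to_webp() {
    local input="$1"
    local output="$2"
    local quality="${3:-85}"
    local mode="${4:-photo}"

    case "$mode" in
        photo)
            # For photographs (-size asks the encoder to aim for roughly 200 KB)
            cwebp -q "$quality" -m 6 -segments 4 -size 200000 "$input" -o "$output"
            ;;
        graphic)
            # For graphics with sharp edges
            cwebp -q "$quality" -m 6 -alpha_q 95 "$input" -o "$output"
            ;;
        lossless)
            # Lossless WebP for perfect quality
            cwebp -lossless -z 9 "$input" -o "${output%.webp}_lossless.webp"
            ;;
        *)
            echo "Unknown mode: $mode" >&2
            return 1
            ;;
    esac
}
```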

AVIF Implementation:

```bash
# AVIF encoding for maximum compression
avifenc --min 0 --max 63 --speed 6 --jobs 4 input.jpg output.avif

# Quality-based AVIF encoding (-q sets color quality, 0-100; needs libavif 1.0 or newer)
avifenc -q 80 --speed 6 input.jpg output_q80.avif
```

Format Comparison Matrix:

Format | Compression | Quality | Browser Support | Best Use Case
-------|-------------|---------|-----------------|---------------
JPEG | Excellent | Good | Universal | Photographs
PNG | Moderate | Perfect | Universal | Graphics/Alpha
WebP | Superior | Excellent | 96%+ browsers | Modern web
AVIF | Best | Excellent | 90%+ browsers | Cutting-edge
HEIF | Excellent | Superior | Limited | Apple ecosystem
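Because support for the newer formats is not universal, servers commonly pick a format per request from the browser's `Accept` header. The sketch below shows one way this could look; the function name and the AVIF-then-WebP preference order are assumptions, and it deliberately ignores `q` weights for brevity.

```python
def pick_image_format(accept_header: str, fallback: str = "jpeg") -> str:
    """Choose the most efficient format the client advertises support for."""
    # Keep only the media type of each entry, dropping parameters such as ";q=0.8"
    offered = {part.split(";")[0].strip().lower() for part in accept_header.split(",")}

    # Preference order: best compression first (an assumption, adjust as needed)
    for mime, fmt in (("image/avif", "avif"), ("image/webp", "webp")):
        if mime in offered:
            return fmt
    return fallback


print(pick_image_format("image/avif,image/webp,image/apng,*/*;q=0.8"))  # avif
print(pick_image_format("image/webp,*/*"))                               # webp
print(pick_image_format("*/*"))                                          # jpeg
```

When serving different formats from the same URL this way, responses should also carry a `Vary: Accept` header so caches keep the variants apart.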

Professional Compression Workflows

Quality Assessment Framework

Objective Quality Metrics:

```python
import os

import cv2
import numpy as np
from skimage.metrics import structural_similarity as ssim


def calculate_image_quality_metrics(original_path, compressed_path):
    # Load images (cv2.imread returns None when a file cannot be read)
    original = cv2.imread(original_path)
    compressed = cv2.imread(compressed_path)
    if original is None or compressed is None:
        raise FileNotFoundError("Could not read one of the input images")

    # Convert to grayscale for some metrics
    original_gray = cv2.cvtColor(original, cv2.COLOR_BGR2GRAY)
    compressed_gray = cv2.cvtColor(compressed, cv2.COLOR_BGR2GRAY)

    # Calculate PSNR (Peak Signal-to-Noise Ratio)
    psnr = cv2.PSNR(original, compressed)

    # Calculate SSIM (Structural Similarity Index)
    ssim_score = ssim(original_gray, compressed_gray)

    # Calculate MSE (Mean Squared Error); cast to float first,
    # because subtracting uint8 arrays wraps around instead of going negative
    diff = original.astype(np.float64) - compressed.astype(np.float64)
    mse = np.mean(diff ** 2)

    # File size comparison
    original_size = os.path.getsize(original_path)
    compressed_size = os.path.getsize(compressed_path)
    compression_ratio = original_size / compressed_size

    return {
        'psnr': psnr,
        'ssim': ssim_score,
        'mse': mse,
        'compression_ratio': compression_ratio,
        'size_reduction_percent': (1 - compressed_size / original_size) * 100
    }


# Quality interpretation guidelines
def interpret_quality_metrics(metrics):
    psnr = metrics['psnr']
    ssim_score = metrics['ssim']

    if psnr > 40 and ssim_score > 0.95:
        return "Excellent quality - virtually indistinguishable"
    elif psnr > 30 and ssim_score > 0.9:
        return "Very good quality - minor differences"
    elif psnr > 25 and ssim_score > 0.8:
        return "Good quality - acceptable for most uses"
    elif psnr > 20 and ssim_score > 0.7:
        return "Fair quality - noticeable but usable"
    else:
        return "Poor quality - significant degradation"
```
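For readers who want to see what PSNR measures, the relationship between MSE and PSNR can be written out with the standard library alone. This sketch assumes 8-bit samples (peak value 255) passed as flat sequences; the sample values are made up for illustration.

```python
import math
from typing import Sequence


def mean_squared_error(a: Sequence[int], b: Sequence[int]) -> float:
    """Average of squared per-sample differences."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr_db(a: Sequence[int], b: Sequence[int], max_value: int = 255) -> float:
    """PSNR = 10 * log10(MAX^2 / MSE), in decibels; infinite for identical inputs."""
    error = mean_squared_error(a, b)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)


original = [0, 64, 128, 255]
compressed = [1, 63, 129, 254]  # every sample off by one, so MSE = 1

print(mean_squared_error(original, compressed))  # 1.0
print(f"{psnr_db(original, compressed):.2f} dB")  # 48.13 dB
```

Because the scale is logarithmic, each halving of the MSE adds about 3 dB.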

Visual Quality Assessment:

```javascript
// Browser-based quality comparison tool
class ImageQualityComparer {
  constructor(originalImage, compressedImage) {
    this.original = originalImage;
    this.compressed = compressedImage;
    this.canvas = document.createElement('canvas');
    this.ctx = this.canvas.getContext('2d');
  }

  calculateDifference() {
    const width = Math.max(this.original.width, this.compressed.width);
    const height = Math.max(this.original.height, this.compressed.height);

    this.canvas.width = width;
    this.canvas.height = height;

    // Draw original
    this.ctx.drawImage(this.original, 0, 0, width, height);
    const originalData = this.ctx.getImageData(0, 0, width, height);

    // Draw compressed (clear first so transparent areas do not show the original)
    this.ctx.clearRect(0, 0, width, height);
    this.ctx.drawImage(this.compressed, 0, 0, width, height);
    const compressedData = this.ctx.getImageData(0, 0, width, height);

    // Calculate pixel differences
    const diffData = this.ctx.createImageData(width, height);
    let totalDifference = 0;

    for (let i = 0; i < originalData.data.length; i += 4) {
      const rDiff = Math.abs(originalData.data[i] - compressedData.data[i]);
      const gDiff = Math.abs(originalData.data[i + 1] - compressedData.data[i + 1]);
      const bDiff = Math.abs(originalData.data[i + 2] - compressedData.data[i + 2]);

      const avgDiff = (rDiff + gDiff + bDiff) / 3;
      totalDifference += avgDiff;

      // Highlight differences in red
      diffData.data[i] = Math.min(255, avgDiff * 3);     // Red
      diffData.data[i + 1] = 0;                          // Green
      diffData.data[i + 2] = 0;                          // Blue
      diffData.data[i + 3] = Math.min(255, avgDiff * 2); // Alpha
    }

    const averageDifference = totalDifference / (originalData.data.length / 4);

    return {
      averageDifference,
      diffImageData: diffData,
      qualityScore: Math.max(0, 100 - averageDifference)
    };
  }
}
```

Automated Compression Pipeline

Intelligent Compression System:

```python
import os
import subprocess

import cv2
import numpy as np


class IntelligentImageCompressor:
    def __init__(self):
        self.quality_thresholds = {
            'high': {'psnr_min': 35, 'ssim_min': 0.95, 'max_size_kb': 500},
            'medium': {'psnr_min': 30, 'ssim_min': 0.9, 'max_size_kb': 300},
            'low': {'psnr_min': 25, 'ssim_min': 0.8, 'max_size_kb': 150}
        }

    def analyze_image_content(self, image_path):
        """Analyze image to determine optimal compression strategy"""
        img = cv2.imread(image_path)
        if img is None:
            raise ValueError(f"Could not read image: {image_path}")

        # Calculate image complexity metrics
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

        # Edge density (high = complex image)
        edges = cv2.Canny(gray, 50, 150)
        edge_density = np.sum(edges > 0) / edges.size

        # Color diversity
        unique_colors = len(np.unique(img.reshape(-1, img.shape[-1]), axis=0))
        color_complexity = unique_colors / (img.shape[0] * img.shape[1])

        # Noise level estimation
        noise_level = cv2.Laplacian(gray, cv2.CV_64F).var()

        # Determine image type
        if edge_density > 0.1 and color_complexity < 0.01:
            return 'graphic'  # Logos, text, simple graphics
        elif edge_density < 0.05 and noise_level < 500:
            return 'smooth'   # Simple photos, gradients
        else:
            return 'complex'  # Detailed photographs

    def determine_optimal_settings(self, image_path, target_quality, target_size_kb=None):
        """Determine optimal compression settings based on image analysis"""
        content_type = self.analyze_image_content(image_path)

        settings = {
            'graphic': {
                'format': 'png',
                'png_quality': 90,
                'jpeg_quality': 95,
                'webp_quality': 95
            },
            'smooth': {
                'format': 'jpeg',
                'jpeg_quality': 85,
                'webp_quality': 80,
                'progressive': True
            },
            'complex': {
                'format': 'jpeg',
                'jpeg_quality': 80,
                'webp_quality': 75,
                'progressive': True
            }
        }

        base_settings = settings[content_type]

        # Adjust based on target quality
        if target_quality == 'high':
            base_settings['jpeg_quality'] = min(95, base_settings['jpeg_quality'] + 10)
            base_settings['webp_quality'] = min(95, base_settings['webp_quality'] + 10)
        elif target_quality == 'low':
            base_settings['jpeg_quality'] = max(60, base_settings['jpeg_quality'] - 15)
            base_settings['webp_quality'] = max(60, base_settings['webp_quality'] - 15)

        return base_settings

    def compress_with_target_size(self, input_path, output_path, target_size_kb):
        """Compress image to meet specific file size target"""
        # Binary search for the highest quality that fits the target
        min_quality = 20
        max_quality = 95
        # Fall back to the lowest quality if no setting meets the target
        best_quality = min_quality

        while min_quality <= max_quality:
            current_quality = (min_quality + max_quality) // 2

            # Test compression
            temp_output = f"temp_{current_quality}.jpg"
            subprocess.run([
                'magick', input_path,
                '-quality', str(current_quality),
                temp_output
            ], capture_output=True)

            # Check file size
            if not os.path.exists(temp_output):
                break
            file_size_kb = os.path.getsize(temp_output) / 1024
            os.remove(temp_output)

            if file_size_kb <= target_size_kb:
                best_quality = current_quality
                min_quality = current_quality + 1
            else:
                max_quality = current_quality - 1

        # Final compression with best quality
        subprocess.run([
            'magick', input_path,
            '-quality', str(best_quality),
            output_path
        ], check=True)

        return best_quality

    def batch_compress(self, input_dir, output_dir, settings):
        """Batch compress images with intelligent optimization"""
        os.makedirs(output_dir, exist_ok=True)
        results = []

        for filename in os.listdir(input_dir):
            if not filename.lower().endswith(('.jpg', '.jpeg', '.png', '.tiff')):
                continue

            input_path = os.path.join(input_dir, filename)

            # Determine optimal settings for this image
            optimal_settings = self.determine_optimal_settings(
                input_path, settings['target_quality']
            )

            # Process image
            name, ext = os.path.splitext(filename)
            output_path = os.path.join(output_dir, f"{name}_compressed{ext}")

            # Apply compression
            if settings.get('target_size_kb'):
                quality_used = self.compress_with_target_size(
                    input_path, output_path, settings['target_size_kb']
                )
            else:
                quality_used = optimal_settings['jpeg_quality']
                subprocess.run([
                    'magick', input_path,
                    '-quality', str(quality_used),
                    output_path
                ], check=True)

            # Calculate results
            original_size = os.path.getsize(input_path)
            compressed_size = os.path.getsize(output_path)
            compression_ratio = original_size / compressed_size

            results.append({
                'filename': filename,
                'original_size_kb': original_size / 1024,
                'compressed_size_kb': compressed_size / 1024,
                'compression_ratio': compression_ratio,
                'size_reduction_percent': (1 - compressed_size / original_size) * 100,
                'quality_used': quality_used,
                'content_type': self.analyze_image_content(input_path)
            })

        return results


# Usage example
if __name__ == "__main__":
    compressor = IntelligentImageCompressor()
    results = compressor.batch_compress(
        'input_images/',
        'compressed_images/',
        {
            'target_quality': 'medium',
            'target_size_kb': 200
        }
    )
```
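The size-targeting logic is easier to follow when separated from the encoder. In this sketch the encoder is replaced by a callable that reports the output size for a given quality; `fake_size` is a hypothetical stand-in, and the search assumes size grows monotonically with quality, which holds for typical JPEG encoders but is not guaranteed.

```python
from typing import Callable, Optional


def highest_quality_within(
    size_at: Callable[[int], int],
    target_bytes: int,
    low: int = 20,
    high: int = 95,
) -> Optional[int]:
    """Binary-search the highest quality whose output fits within target_bytes.

    Returns None when even the lowest quality is too large.
    """
    best = None
    while low <= high:
        mid = (low + high) // 2
        if size_at(mid) <= target_bytes:
            best = mid      # fits: remember it and try a higher quality
            low = mid + 1
        else:
            high = mid - 1  # too big: try a lower quality
    return best


def fake_size(quality: int) -> int:
    """Hypothetical encoder model: 2,000 bytes per quality point."""
    return 2_000 * quality


print(highest_quality_within(fake_size, 150_000))  # 75
print(highest_quality_within(fake_size, 10_000))   # None
```

Returning `None` instead of silently using the maximum quality lets the caller decide what to do when the target is unreachable, for example by resizing the image first.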

Advanced Compression Techniques

Perceptual Optimization

Human Visual System Considerations:

```python
import cv2
import numpy as np


def perceptual_compression_optimization(image_path):
    """Optimize compression based on human visual perception"""
    img = cv2.imread(image_path)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Identify regions of interest
    # 1. Face detection for preserving important areas
    face_cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + 'haarcascade_frontalface_default.xml'
    )
    faces = face_cascade.detectMultiScale(gray, 1.1, 4)

    # 2. Edge detection for detail preservation
    edges = cv2.Canny(gray, 50, 150)

    # Create importance map
    importance_map = np.zeros(img.shape[:2], dtype=np.float32)

    # Higher importance for faces
    for (x, y, w, h) in faces:
        importance_map[y:y+h, x:x+w] += 0.5

    # Higher importance for edges
    importance_map += edges / 255.0 * 0.3

    # Overlapping detections can push values past 1, so clamp before scaling
    importance_map = np.clip(importance_map, 0.0, 1.0)

    # Apply variable quality based on importance
    quality_map = 60 + (importance_map * 35)  # Quality range: 60-95

    return quality_map


def apply_variable_quality_compression(image_path, quality_map, output_path):
    """Apply variable quality compression based on importance map"""
    # True per-region quality would require a custom JPEG encoder.
    # As a practical approximation, compress each tile separately at its own
    # quality and reassemble the decoded tiles into one image.
    img = cv2.imread(image_path)
    height, width = img.shape[:2]

    # Divide image into tiles
    tile_size = 64
    compressed_tiles = []

    for y in range(0, height, tile_size):
        for x in range(0, width, tile_size):
            # Extract tile
            tile = img[y:y+tile_size, x:x+tile_size]

            # Get average quality for this tile
            tile_quality = np.mean(quality_map[y:y+tile_size, x:x+tile_size])

            # Compress tile with specific quality
            _, encoded = cv2.imencode(
                '.jpg', tile, [cv2.IMWRITE_JPEG_QUALITY, int(tile_quality)]
            )
            decoded_tile = cv2.imdecode(encoded, cv2.IMREAD_COLOR)

            compressed_tiles.append((x, y, decoded_tile))

    # Reconstruct image from tiles
    result = np.zeros_like(img)
    for x, y, tile in compressed_tiles:
        h, w = tile.shape[:2]
        result[y:y+h, x:x+w] = tile

    cv2.imwrite(output_path, result)
```

Content-Aware Compression

Region-Based Optimization:

```javascript
// Browser-based content-aware compression
class ContentAwareCompressor {
  constructor(canvas) {
    this.canvas = canvas;
    this.ctx = canvas.getContext('2d');
  }

  analyzeImageRegions(imageData) {
    const { width, height, data } = imageData;

    // Analyze each region for complexity
    const regionSize = 32;
    const regions = [];

    for (let y = 0; y < height; y += regionSize) {
      for (let x = 0; x < width; x += regionSize) {
        // Regions on the right and bottom edges may be smaller than regionSize
        const regionWidth = Math.min(regionSize, width - x);
        const regionHeight = Math.min(regionSize, height - y);

        const region = this.extractRegion(data, x, y, regionSize, width, height);
        const complexity = this.calculateRegionComplexity(region, regionWidth);

        regions.push({
          x,
          y,
          width: regionWidth,
          height: regionHeight,
          complexity,
          recommendedQuality: this.getQualityForComplexity(complexity)
        });
      }
    }

    return regions;
  }

  extractRegion(data, startX, startY, size, imageWidth, imageHeight) {
    const region = [];
    const endX = Math.min(startX + size, imageWidth);
    const endY = Math.min(startY + size, imageHeight);

    for (let y = startY; y < endY; y++) {
      for (let x = startX; x < endX; x++) {
        const index = (y * imageWidth + x) * 4;
        region.push([
          data[index],     // R
          data[index + 1], // G
          data[index + 2], // B
          data[index + 3]  // A
        ]);
      }
    }

    return region;
  }

  calculateRegionComplexity(region, regionWidth) {
    // Calculate color variance
    const colors = { r: [], g: [], b: [] };
    region.forEach(pixel => {
      colors.r.push(pixel[0]);
      colors.g.push(pixel[1]);
      colors.b.push(pixel[2]);
    });

    const variance = {
      r: this.calculateVariance(colors.r),
      g: this.calculateVariance(colors.g),
      b: this.calculateVariance(colors.b)
    };
    const avgVariance = (variance.r + variance.g + variance.b) / 3;

    // Calculate edge density
    const edgeDensity = this.calculateEdgeDensity(region, regionWidth);

    // Combine metrics for overall complexity score.
    // The score can exceed 1 for high-variance regions;
    // getQualityForComplexity clamps the resulting quality.
    return (avgVariance / 255 * 0.6 + edgeDensity * 0.4);
  }

  calculateVariance(values) {
    const mean = values.reduce((sum, val) => sum + val, 0) / values.length;
    const squaredDiffs = values.map(val => Math.pow(val - mean, 2));
    return squaredDiffs.reduce((sum, val) => sum + val, 0) / values.length;
  }

  calculateEdgeDensity(region, regionWidth) {
    // Simplified edge detection for region
    let edgeCount = 0;

    for (let i = 0; i < region.length; i++) {
      const current = region[i];
      // The right neighbor only exists within the same row
      const right = (i + 1) % regionWidth !== 0 ? region[i + 1] : undefined;
      const below = region[i + regionWidth];

      if (right && this.colorDistance(current, right) > 30) edgeCount++;
      if (below && this.colorDistance(current, below) > 30) edgeCount++;
    }

    return edgeCount / region.length;
  }

  colorDistance(color1, color2) {
    return Math.sqrt(
      Math.pow(color1[0] - color2[0], 2) +
      Math.pow(color1[1] - color2[1], 2) +
      Math.pow(color1[2] - color2[2], 2)
    );
  }

  getQualityForComplexity(complexity) {
    // Higher complexity = higher quality needed
    return Math.max(60, Math.min(95, 60 + complexity * 35));
  }
}
```

Tool Integration and Practical Applications

Professional Compression Tools

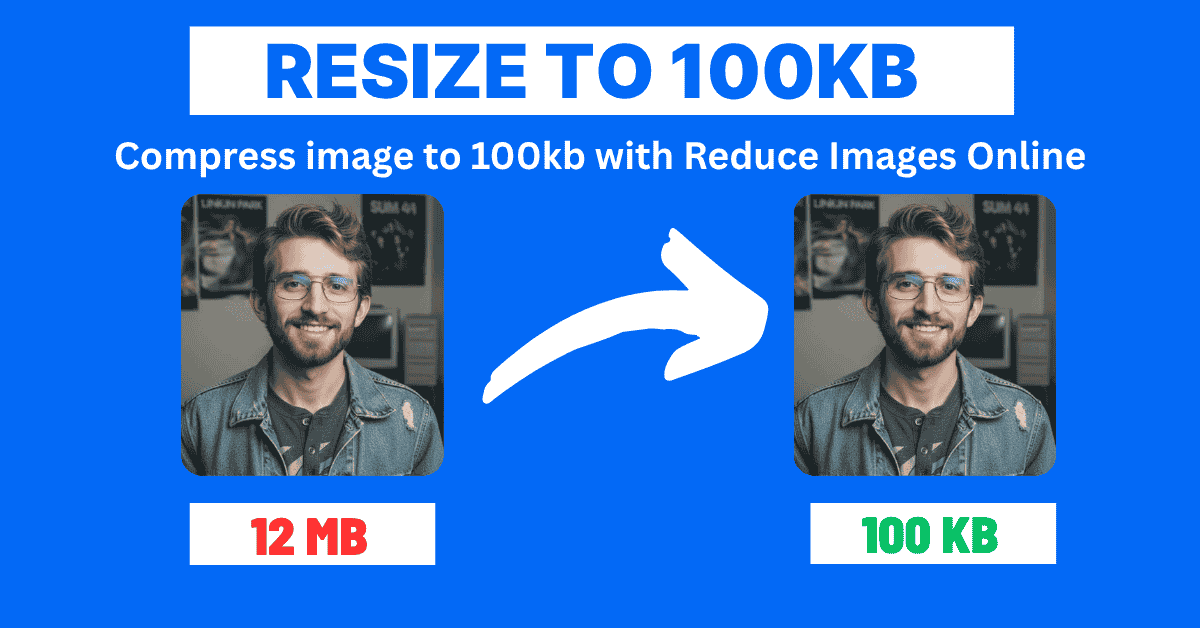

ReduceImages.online Integration: Our advanced image compression tool combines multiple optimization techniques for superior results:

```javascript
// Example integration with ReduceImages.online workflow
class ReduceImagesIntegration {
  constructor() {
    this.apiEndpoint = 'https://reduceimages.online/api/compress';
    this.supportedFormats = ['jpeg', 'png', 'webp'];
  }

  async compressImage(file, options = {}) {
    const formData = new FormData();
    formData.append('image', file);
    formData.append('quality', options.quality || 80);
    formData.append('format', options.format || 'auto');
    // Only send a size limit when one was given; appending null would send the string "null"
    if (options.maxSizeKB != null) {
      formData.append('maxSizeKB', options.maxSizeKB);
    }

    try {
      const response = await fetch(this.apiEndpoint, {
        method: 'POST',
        body: formData
      });
      if (!response.ok) {
        throw new Error(`Compression request failed with status ${response.status}`);
      }

      const result = await response.json();

      return {
        compressedFile: result.compressedFile,
        originalSize: result.originalSize,
        compressedSize: result.compressedSize,
        compressionRatio: result.compressionRatio,
        quality: result.qualityUsed
      };
    } catch (error) {
      console.error('Compression failed:', error);
      throw error;
    }
  }

  async batchCompress(files, options = {}) {
    const results = [];

    for (const file of files) {
      try {
        const result = await this.compressImage(file, options);
        results.push({ success: true, file: file.name, ...result });
      } catch (error) {
        results.push({ success: false, file: file.name, error: error.message });
      }
    }

    return results;
  }
}

// Usage example (top-level await requires an ES module;
// imageFile and imageFiles are File objects, e.g. from an <input type="file">)
const compressor = new ReduceImagesIntegration();

// Single image compression
const compressedResult = await compressor.compressImage(imageFile, {
  quality: 85,
  format: 'webp',
  maxSizeKB: 200
});

// Batch compression for efficiency
const batchResults = await compressor.batchCompress(imageFiles, {
  quality: 80,
  maxSizeKB: 150
});
```

Command Line Workflow Integration:

```bash
#!/bin/bash
# Professional compression workflow script
# Note: stat -c%s is the GNU form; on macOS/BSD use stat -f%z instead

# Configuration
INPUT_DIR="./source_images"
OUTPUT_DIR="./compressed_images"
QUALITY_HIGH=90
QUALITY_MEDIUM=80
QUALITY_LOW=65
MAX_SIZE_KB=200

# Create output directories
mkdir -p "$OUTPUT_DIR"/{high,medium,low}

# Function to compress with size target
compress_to_size() {
    local input="$1"
    local output="$2"
    local max_size="$3"
    local quality="$4"
    local current_size current_size_kb

    # Initial compression
    magick "$input" -quality "$quality" "$output"

    # Check if size target is met
    current_size=$(stat -c%s "$output")
    current_size_kb=$((current_size / 1024))

    # If too large, reduce quality iteratively
    while [ "$current_size_kb" -gt "$max_size" ] && [ "$quality" -gt 30 ]; do
        quality=$((quality - 5))
        magick "$input" -quality "$quality" "$output"
        current_size=$(stat -c%s "$output")
        current_size_kb=$((current_size / 1024))
    done

    echo "Compressed $input to ${current_size_kb}KB at quality $quality"
}

# Process each image
for image in "$INPUT_DIR"/*.{jpg,jpeg,png,tiff}; do
    if [[ -f "$image" ]]; then
        filename=$(basename "$image")
        name="${filename%.*}"

        echo "Processing: $filename"

        # High quality version
        compress_to_size "$image" "$OUTPUT_DIR/high/${name}.jpg" 500 $QUALITY_HIGH

        # Medium quality version
        compress_to_size "$image" "$OUTPUT_DIR/medium/${name}.jpg" $MAX_SIZE_KB $QUALITY_MEDIUM

        # Low quality version
        compress_to_size "$image" "$OUTPUT_DIR/low/${name}.jpg" 100 $QUALITY_LOW

        # WebP versions for modern browsers
        magick "$image" -quality $QUALITY_MEDIUM "$OUTPUT_DIR/medium/${name}.webp"

        # Calculate savings
        original_size=$(stat -c%s "$image")
        compressed_size=$(stat -c%s "$OUTPUT_DIR/medium/${name}.jpg")
        savings=$(( (original_size - compressed_size) * 100 / original_size ))

        echo "  Savings: ${savings}% ($(( original_size / 1024 ))KB → $(( compressed_size / 1024 ))KB)"
    fi
done

echo "Compression complete!"
```

Related Articles and Further Reading

This comprehensive compression guide connects with our other optimization resources:

- How to Compress Photos Without Losing Quality - Detailed techniques for quality preservation

- 10 Simple Ways to Reduce Image Size Without Losing Quality - Quick optimization methods

- Complete Guide to Image Resizing - Master image dimensioning and scaling

- Resize Images for Web: Best Practices and Tools - Web-specific optimization strategies

Conclusion

Mastering image compression is essential for modern web performance and user experience. The techniques and strategies outlined in this guide provide a comprehensive foundation for achieving dramatic file size reductions while maintaining visual quality that meets professional standards.

Key Takeaways:

- Format selection matters: Choose JPEG for photographs, PNG for graphics, WebP for modern browsers

- Quality settings require balance: 80-85% typically provides optimal quality-to-size ratio

- Content analysis improves results: Different image types require different optimization approaches

- Automation saves time: Batch processing and intelligent workflows scale your optimization efforts

- Continuous monitoring ensures performance: Regular testing and optimization maintain competitive advantage

Performance Impact: Proper image compression can reduce page load times by 50-80%, improve Core Web Vitals scores, and significantly enhance user engagement and conversion rates.

Transform your image optimization workflow with our professional compression tools. Experience the perfect balance of quality and performance that keeps your users engaged and your site fast.

Ready to master image compression? Explore our specialized guides for format-specific optimization and discover the tools that make professional compression accessible to everyone.